Understanding Callback Queue Starvation in Asynchronous Programming

In asynchronous JavaScript, where operations start now and finish later without blocking the rest of the program, lies a subtle yet critical issue known as "Callback Queue Starvation." The name may sound obscure, but the issue can noticeably hurt the performance and reliability of your applications.

What is Callback Queue Starvation?

To grasp callback queue starvation, let's first review the basics of asynchronous programming in JavaScript. JavaScript runs your code on a single thread. Asynchronous operations such as network requests and timers are carried out by the runtime (the browser or Node.js) outside the main program flow, and callback functions handle their results once they complete. The event loop runs those callbacks one at a time, and only when the call stack is empty.

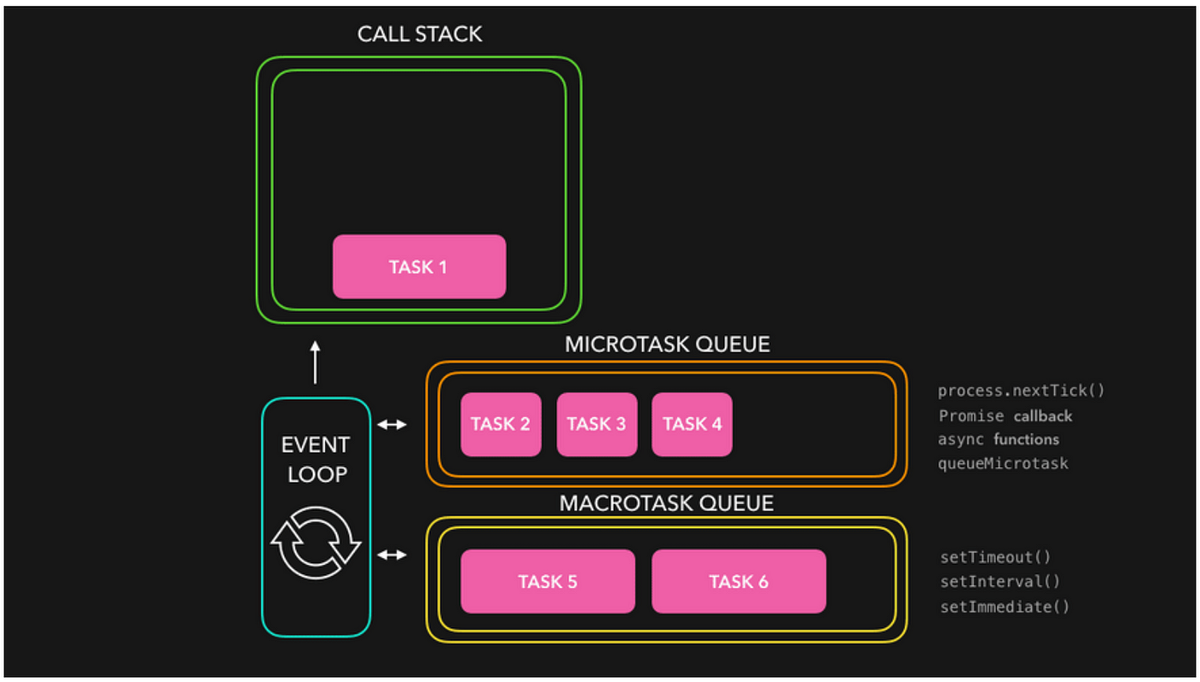

Callback queue starvation occurs when callbacks waiting in the callback queue cannot run because the event loop is kept busy with other work. Since only one piece of JavaScript runs at a time, a long-running task on the call stack delays every callback queued behind it. A microtask queue that keeps refilling itself has the same effect. In either case, subsequent callbacks are delayed, and in the extreme case they never run at all. To understand the second cause, we need to know the difference between the callback queue and the microtask queue.

Callback Queue vs. Microtask Queue

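The callback queue (also called the task or macrotask queue) holds callbacks that are ready to run, such as setTimeout and setInterval callbacks, I/O completions, and UI event handlers. It is a fundamental component of the event loop: when the call stack is empty, the event loop takes the next callback from this queue and runs it. The microtask queue is a separate, higher-priority queue that holds promise reactions (.then(), .catch(), .finally(), and the code after an await) as well as functions passed to queueMicrotask(). After each callback finishes, the event loop drains the microtask queue completely, including any microtasks added while it is draining, before it takes the next callback.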
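
This ordering rule can be observed directly. The sketch below assumes a JavaScript runtime that provides setTimeout, Promise, and queueMicrotask (any modern browser or Node.js); demoQueueOrder is a hypothetical helper that records when each kind of callback runs.

```javascript
// Hypothetical helper: records the order in which synchronous code,
// microtasks, and a callback-queue task actually run.
function demoQueueOrder() {
  const order = [];
  return new Promise((resolve) => {
    // Callback queue: runs only after the stack and the microtask queue are empty.
    setTimeout(() => {
      order.push('timeout callback');
      resolve(order);
    }, 0);

    // Microtask queue: runs as soon as the current synchronous code finishes.
    Promise.resolve().then(() => order.push('promise microtask'));
    queueMicrotask(() => order.push('queueMicrotask microtask'));

    // Synchronous code on the call stack runs before either queue.
    order.push('synchronous code');
  });
}

demoQueueOrder().then((order) => console.log(order));
```

Although the timer is scheduled first, the recorded order is the synchronous code, then both microtasks in the order they were queued, and only then the timer callback.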

Example: Fetch Calls and Callback Queue Starvation

Consider several fetch calls made one after another in a single promise chain, where each request starts only after the previous response has been handled:

```javascript
// Multiple fetch calls, each started after the previous one has been handled
fetch('https://api.example.com/data1')
  .then(response => response.json())
  .then(data => {
    console.log('Data 1:', data);
    return fetch('https://api.example.com/data2'); // start the next request
  })
  .then(response => response.json())
  .then(data => {
    console.log('Data 2:', data);
    return fetch('https://api.example.com/data3');
  })
  .then(response => response.json())
  .then(data => {
    console.log('Data 3:', data);
  })
  .catch(error => console.error('Request failed:', error)); // handle a failure anywhere in the chain
```

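In this example, each fetch call starts a network request that the runtime handles outside the JavaScript thread, so waiting for a slow response does not block the callback queue; it only delays the rest of this chain. Each .then() handler is queued as a microtask once the promise it is attached to settles. Starvation becomes a risk when a handler does heavy synchronous work, such as transforming a large payload: while it runs, nothing else on the thread can execute, and timers, I/O callbacks, and UI events pile up in the callback queue.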
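
The following sketch makes this distinction concrete without a network. measureTimerDelay and blockFor are hypothetical helpers, and an already-resolved promise stands in for a settled fetch. A 0 ms timer is queued first, but a promise handler that spins for workMs milliseconds holds the thread, so the timer's callback has to wait in the callback queue.

```javascript
// Hypothetical helper: busy-waits for `ms` milliseconds, standing in for heavy
// synchronous work (e.g. transforming a large response) inside a .then() handler.
function blockFor(ms) {
  const end = Date.now() + ms;
  while (Date.now() < end) {
    // Spin: nothing else on this thread can run until the loop exits.
  }
}

// Hypothetical helper: reports how long a 0 ms timer really waited while a
// promise handler performed `workMs` milliseconds of synchronous work.
function measureTimerDelay(workMs) {
  return new Promise((resolve) => {
    const scheduledAt = Date.now();

    // Callback-queue task that asks to run "as soon as possible".
    setTimeout(() => resolve(Date.now() - scheduledAt), 0);

    // Stand-in for a settled fetch: its handler runs as a microtask, first.
    Promise.resolve({ items: [] }).then(() => blockFor(workMs));
  });
}

measureTimerDelay(200).then((delay) => {
  console.log(`A 0 ms timer actually waited ${delay} ms`);
});
```

The reported delay is at least the 200 ms spent in the handler, even though the timer asked for 0 ms. Replacing blockFor with an await on a slow network call would not delay the timer, because waiting for I/O does not occupy the thread.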

Microtask Queue Priority

To illustrate the priority of the microtask queue over the callback queue, let's introduce a simple console log wrapped in a Promise:

```javascript
// Adding a microtask with Promise
Promise.resolve().then(() => {
  console.log('Microtask executed');
});
```

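If this snippet runs in the same script as the fetch chain, "Microtask executed" is logged as soon as the current synchronous code finishes: ahead of any callbacks already waiting in the callback queue, such as setTimeout callbacks, and ahead of the fetch handlers, whose promises cannot settle until their network requests complete. Because microtasks always run before the next callback, a steady stream of them can keep callbacks waiting indefinitely, which is exactly how starvation arises.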
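
Because the microtask queue is always drained completely, microtasks that keep queuing more microtasks can hold off the callback queue for as long as the chain continues. The sketch below uses a hypothetical starveWithMicrotasks helper; it bounds the chain at count microtasks so that it terminates, and reports how many of them ran before a 0 ms timer got its turn.

```javascript
// Hypothetical helper: runs a chain of `count` microtasks, each queuing the
// next, and resolves with how many had run when a 0 ms timer finally fired.
function starveWithMicrotasks(count) {
  return new Promise((resolve) => {
    let microtasksRun = 0;

    // Callback-queue task scheduled before the chain starts.
    setTimeout(() => resolve(microtasksRun), 0);

    function step() {
      microtasksRun += 1;
      if (microtasksRun < count) {
        queueMicrotask(step); // keeps the microtask queue non-empty until the chain ends
      }
    }
    queueMicrotask(step);
  });
}

starveWithMicrotasks(100000).then((ran) => {
  console.log(`The timer ran only after ${ran} microtasks`);
});
```

All 100,000 microtasks run before the timer callback. If step re-queued itself unconditionally, the timer, and every other callback, would never run: callback queue starvation in its purest form.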

The Hidden Impact

The consequences of callback queue starvation can be subtle yet pervasive:

- Delayed Responses: Callback functions intended to handle the results of asynchronous operations may experience significant delays, leading to poor user experience and degraded performance.

- Unpredictable Behavior: Timers fire late and callbacks run in a different order than the code seems to suggest, causing timing-dependent bugs that are hard to reproduce and debug.

- Resource Waste: While a long-running task monopolizes the event loop, every other pending callback waits, including incoming requests on a server, so the application completes less work per second and scales poorly under load.
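
One way to make the "Delayed Responses" symptom measurable is to track event-loop lag: how much later than requested a timer callback actually runs. The sketch below is a hand-rolled sampler (sampleEventLoopLag is a hypothetical name, not a library API); in Node.js, the built-in perf_hooks.monitorEventLoopDelay() offers a more precise, histogram-based alternative.

```javascript
// Hypothetical helper: measures event-loop lag. Each sample schedules a timer
// for `intervalMs`; any extra time is time the callback spent waiting its turn.
function sampleEventLoopLag(intervalMs, samples) {
  return new Promise((resolve) => {
    const lags = [];
    function scheduleSample() {
      const scheduledAt = Date.now();
      setTimeout(() => {
        const lag = Date.now() - scheduledAt - intervalMs;
        lags.push(Math.max(0, lag)); // clamp small negative values from clock rounding
        if (lags.length < samples) {
          scheduleSample();
        } else {
          resolve(lags);
        }
      }, intervalMs);
    }
    scheduleSample();
  });
}

// Usage: start sampling, then hog the thread with 150 ms of synchronous work.
sampleEventLoopLag(10, 5).then((lags) => {
  console.log('Lag per sample (ms):', lags);
});
const busyUntil = Date.now() + 150;
while (Date.now() < busyUntil) {
  // A long-running task: no callback can run until this loop ends.
}
```

The first sample has to wait for the 150 ms loop, so it reports well over 100 ms of lag, while samples taken after the loop ends should stay close to zero.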

Identifying and Mitigating Callback Queue Starvation

Detecting callback queue starvation starts with monitoring for its main symptom: callbacks, such as timers, running noticeably later than they were scheduled. Once it is confirmed, these strategies can reduce its impact:

- Task Segmentation: Break long-running tasks into smaller chunks and yield to the event loop between them. Each chunk then finishes quickly, so timers, I/O callbacks, and UI events waiting in the callback queue get a turn instead of waiting for the whole task.

- Prioritization: Implement mechanisms to prioritize critical callbacks over less important ones. This ensures that essential operations are promptly handled, even in the presence of long-running tasks.

- Concurrency Limits: Cap how many asynchronous operations are in flight at once, so that a burst of completions does not flood the queues with callbacks that all need processing at the same moment. This keeps the workload balanced and prevents callback queue congestion.

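- Asynchronous Patterns: Use Promises and async/await to keep asynchronous code readable, but remember that they do not make work non-blocking. Promise handlers and the code after an await run as microtasks, and Node.js event emitters call their listeners synchronously, so heavy work inside any of them still holds up the callback queue.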
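
The Task Segmentation strategy can be sketched as follows, assuming the work is a synchronous function applied to each item of an array; processInChunks and yieldToEventLoop are hypothetical helpers. Yielding with setTimeout is deliberate: awaiting an already-resolved promise only queues a microtask, which runs before any waiting callback and therefore would not relieve starvation.

```javascript
// Hypothetical helper: resolves on a later turn of the event loop. setTimeout
// queues a callback-queue task, so callbacks that are already due run first.
function yieldToEventLoop() {
  return new Promise((resolve) => setTimeout(resolve, 0));
}

// Hypothetical helper: applies `processItem` to `items` in chunks of
// `chunkSize`, yielding to the event loop between chunks.
async function processInChunks(items, chunkSize, processItem) {
  const results = [];
  for (let start = 0; start < items.length; start += chunkSize) {
    for (const item of items.slice(start, start + chunkSize)) {
      results.push(processItem(item));
    }
    if (start + chunkSize < items.length) {
      await yieldToEventLoop(); // let pending callbacks run before the next chunk
    }
  }
  return results;
}

// Usage: a 0 ms timer scheduled before the job starts gets to run between
// chunks instead of waiting for all 10,000 items.
const items = Array.from({ length: 10000 }, (_, i) => i);
let processedCount = 0;
let processedWhenTimerRan = null;
setTimeout(() => {
  processedWhenTimerRan = processedCount;
}, 0);
processInChunks(items, 1000, (n) => {
  processedCount += 1;
  return n * 2;
}).then((results) => {
  console.log(`${results.length} items doubled; timer ran after ${processedWhenTimerRan} items`);
});
```

Chunk size is a trade-off: smaller chunks let queued callbacks run sooner, while larger ones spend less time on scheduling overhead. In browsers, deeply nested zero-delay timers are clamped to a minimum of around 4 ms, so very small chunks can noticeably slow the overall job.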

Conclusion

Callback queue starvation might not be as conspicuous as other performance bottlenecks, but its impact can be far-reaching. By understanding the nuances of asynchronous programming and employing effective mitigation strategies, developers can ensure that their applications remain responsive, reliable, and scalable in the face of varying workloads.

In the ever-evolving landscape of software development, staying vigilant against hidden challenges like callback queue starvation is essential for delivering robust and high-performing applications.
